Teleport to Anywhere with a Single Click

University of Pennsylvania

ESE 350: Embedded Systems/Microcontroller Laboratory

Yixuan Geng, Yifan Xu

Table of Contents

- Abstract
- Introduction
- Eye Detecting
  - Explore
  - Solution
- IMU Fusion
  - Hardware
  - Software
- Robot Design
  - Mechanical
  - Electrical
  - Software
- Video Streaming
  - Hardware
  - Software
- Testing and Evaluation
- Conclusion

Abstract

Yi is a remote robot that gives people a truly immersive way to see the world through its eyes. Our motivation is to give people with movement difficulties (e.g. patients and the elderly) a means of exploring the outside world.

Yi has two separate parts connected through the internet. The first part is the robot. It is driven by several servo motors that mimic the movements of a person's head and eyes, and it carries two cameras that stream 3-D video back to the user. The second part handles control and display. We plan to use EOG or IR eye-tracking techniques to capture the movement of the eyes, and an IMU to capture the movement of the user's head. These inputs determine the orientation and focus of the robot and its cameras. A two-screen display shows the camera video.

Introduction

Goal of the Project

To build a remote robot and head mounted display system that can provide users with a truly immersive way to see the world through the eyes of the remote robot.

Potential Uses:

- Immobile people can use Yi to explore the outside world without leaving their bed or wheelchair.

- Can be used for improving user experience of teleconferences.

- Can be used for people to explore hazardous places.

Overall architecture

Head-mounted display and sensor system

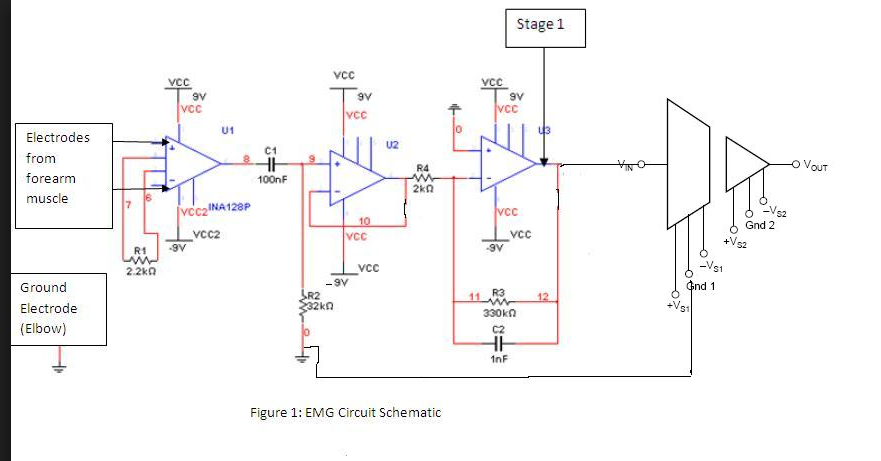

The user wears a head-mounted display and sensor system to see through the cameras of the remote robot and to give commands through head and eye movement. The display will use an Oculus-like headset (we will not be focusing on the display at this stage). To track head movement, we will mount an IMU on the headset. To track eyeball orientation, we will use the EOG (electrooculography) technique: several electrodes placed around the eye detect the change in the electric field as the eye moves. We will also design our own filter and amplification circuit. A Raspberry Pi will process the sensor data and send the orientation information over Wi-Fi to the Raspberry Pi on the remote robot.

Remote robot

The remote robot will have 9 servos in total. A Raspberry Pi with a Wi-Fi module and two camera modules will also be used. The first three servos will control the roll, pitch, and yaw of the robot's main frame based on the orientation of the user's head. The cameras will function as the user's remote 'eyes' and will be controlled by 3 servos each. Two servos correspond to the pitch and yaw of the eye. The third servo will adjust the focus of the camera based on the focal distance estimated from the orientation of the user's eyes. This is important if the user is trying to focus on a near object. We are using two cameras to provide a separate video to each eye and generate a 3-D effect, which we will explore further once the mechanical, sensing, and control parts work well.

Process:

The device follows the process shown in the following block diagram:

Eye Orientation Detection

For eye orientation detection, there are multiple possible methods, including EOG, infrared reflection, and image processing. We chose EOG because, unlike the other two techniques, it doesn't require a device in front of the eye.

EOG stands for electrooculography. The technique relies on the fact that the eyeball is electrically polarized: the cornea is positively charged relative to the retina. Eye movement therefore changes the nearby electric field, and if we place electrodes around the eye, the electrode voltage readings vary with the eye movement.

Explore: Signal Processing with High-Pass-Filter

The signal will first go through a high pass filter that filters out the drifting component of the signal. Then it goes through a buffer, a second stage low pass filter at 16Hz and finally a Twin-T notch filter at 60Hz.

The interesting thing about this circuit is that the drifting of the voltage level, which was a major problem for my previous circuit, is now gone. The voltage level always stays around 0V (or any bias voltage I want), which is great for microcontroller sampling because it never goes out of the range of 0-5V. The downside of the circuit is that when the signal stays at some voltage level (e.g. when the user is staring at some angle), the output voltage decays back to the bias level within roughly 100ms (the half-life depends on the circuit design). So I would need to use some algorithm to recover the signal.

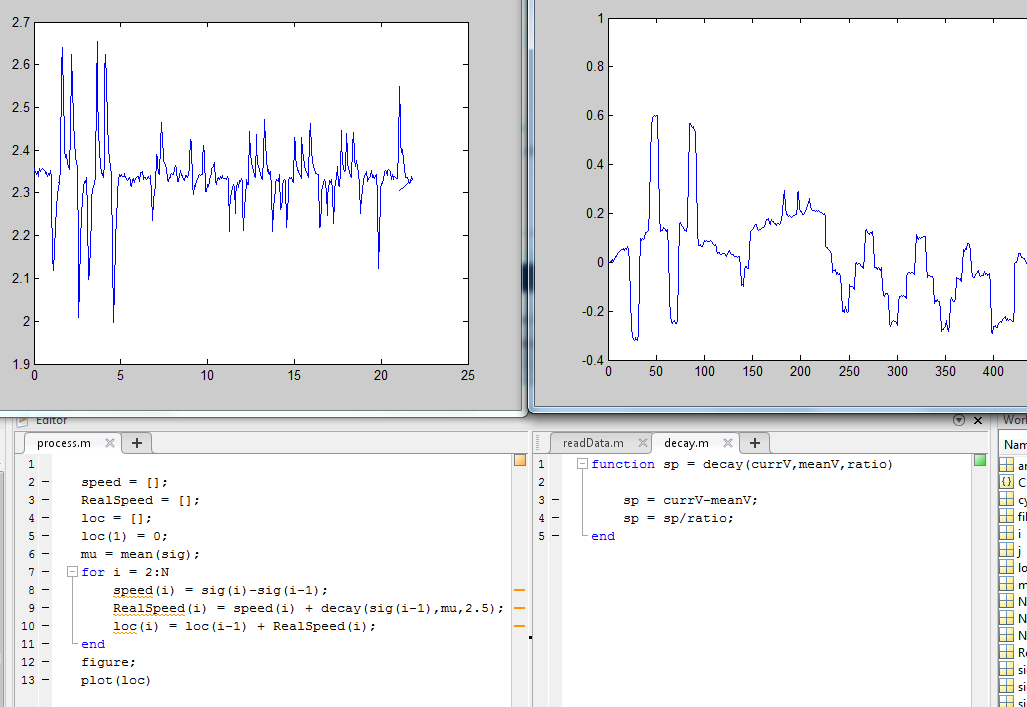

The upper-left graph is the Vout of the circuit. When I roll my eyes to the right, Vout goes up. If we zoom in, we see that the signal decays exponentially to the reference point after each peak, which means that in "free fall" (when Vin doesn't change), dVout/dt = -C0 * (Vout - C1), where C0 is a positive constant that can be measured and C1 is equal to the bias voltage I set. So in order to recover the signal, I can calculate dVout/dt, add back the "free-fall decay speed" C0 * (Vout - C1) to get dVin/dt, and then integrate dVin/dt to get the orientation of the eye. The upper-right graph is the Vin recovered by the algorithm above.
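The recovery step can be sketched in Python as follows. This is a minimal illustration, not the code that ran on our microcontroller: it assumes evenly spaced samples, a simple forward-Euler model of the high-pass stage, and example values for `dt`, `c0`, and `c1` that were not measured from the real circuit.

```python
def simulate_high_pass(vin, dt, c0, c1):
    """Forward-Euler model of the high-pass stage (an assumed model):
    the output follows changes in vin and decays toward the bias c1 at rate c0."""
    vout = [c1]
    for prev_in, cur_in in zip(vin, vin[1:]):
        decay = c0 * (vout[-1] - c1) * dt
        vout.append(vout[-1] + (cur_in - prev_in) - decay)
    return vout


def recover_input(vout, dt, c0, c1):
    """Integrate dVin/dt = dVout/dt + c0 * (Vout - c1) to undo the decay.

    Returns the change in Vin relative to the first sample.
    """
    recovered = [0.0]
    for prev_out, cur_out in zip(vout, vout[1:]):
        dvout_dt = (cur_out - prev_out) / dt
        # Add back the "free-fall" decay speed at the previous sample.
        dvin_dt = dvout_dt + c0 * (prev_out - c1)
        recovered.append(recovered[-1] + dvin_dt * dt)
    return recovered


if __name__ == "__main__":
    # Illustrative values only: 1 kHz sampling, 100 ms time constant, 2.5 V bias.
    dt, c0, c1 = 0.001, 10.0, 2.5
    vin = [0.0] * 100 + [0.2] * 900      # the eye moves once and then stares
    vout = simulate_high_pass(vin, dt, c0, c1)
    estimate = recover_input(vout, dt, c0, c1)
    print("final Vout:", round(vout[-1], 3), "recovered step:", round(estimate[-1], 3))
```

In this idealized setting the filtered output falls back to the bias while the recovered signal keeps the step, which is the behaviour the upper-right graph shows. It only works this cleanly because the model's `c0` and `c1` are known exactly.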

However, the algorithm doesn't work as nicely as above every time. See the following as an example:

The problem is that there's still the annoying drifting. Over about 10 seconds the random drift could account for as much as 50% of the output, which means that if the output changes by 100, the actual signal could have changed by any number between 50 and 100. This accuracy is unacceptable!

Also, there's the problem of choosing C1 (the reference voltage). Since there's an integration step in my algorithm, changing C1 by 0.01V causes a massive drift of the output. It becomes really hard for the microcontroller to set the reference voltage correctly in real time. How do I get that reference voltage, which is supposed to be constant but actually differs across tests for unknown reasons? An averaging algorithm would be problematic when the signal is not symmetric.
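To get a feel for the size of this effect: a constant error in C1 adds a constant term of magnitude C0 * error to dVin/dt, and the integral turns that into a ramp. The short sketch below works through the arithmetic. The value C0 = 10 per second is an assumption that corresponds to a decay time constant of about 100ms, not a measured figure.

```python
def reference_error_drift(c0, c1_error, seconds):
    """Magnitude of the drift (in volts) from integrating a constant C1 error."""
    return abs(c0 * c1_error) * seconds


if __name__ == "__main__":
    # Assumed values: c0 = 10 1/s (about a 100 ms time constant), 0.01 V error in C1.
    for t in (1, 10, 60):
        print(t, "s ->", round(reference_error_drift(10.0, 0.01, t), 3), "V")
```

Under these assumptions a 0.01V error already accumulates to about 1V after 10 seconds, which is larger than the EOG signal itself.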

So I decided to give up this circuit! This theory is beautiful, but there's always the distance between theory and reality.

Solution: Signal Processing without High-Pass-Filter

New circuit:

Not so different. Just that the high-pass filter and the buffer are gone. I tried this circuit and found that the drifting became really small over a short period of time. Why is it so much smaller than in the circuit I used two weeks ago? I guess on that circuit I had too many cascaded RC low-pass filters, and they were cascaded without any buffer in between, so the cut-off frequency was actually much lower than what I calculated (around 4Hz instead of the 16Hz that I intended). This would make the drift relatively bigger than the EOG signal, since drifting usually has a much lower frequency. And when I had 10 RC LPFs cascaded like that, it's no surprise that I got a crazy 5V drift when the EOG signal was just about 200mV. With the new circuit, the cut-off frequency stays at 16Hz. As a result, the drift and the EOG signal are "treated" quite equally. Over about a minute the drift could be around 0.1V - 0.2V when the signal is at 0.5V.
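The lowered cut-off can be checked with a quick calculation. The sketch below uses the standard result for N identical first-order low-pass stages separated by ideal buffers. That is an idealization: my old circuit had no buffers, and loading between unbuffered stages pushes the cut-off lower still. The assumption that all 10 stages were designed for 16Hz is also only illustrative.

```python
import math


def cascaded_cutoff_hz(stage_cutoff_hz, n_stages):
    """-3 dB frequency of n identical, ideally buffered first-order low-pass stages."""
    return stage_cutoff_hz * math.sqrt(2.0 ** (1.0 / n_stages) - 1.0)


def cascade_gain(freq_hz, stage_cutoff_hz, n_stages):
    """Magnitude response of the same buffered cascade at freq_hz."""
    single = 1.0 / math.sqrt(1.0 + (freq_hz / stage_cutoff_hz) ** 2)
    return single ** n_stages


if __name__ == "__main__":
    for n in (1, 2, 5, 10):
        print(n, "stage(s):", round(cascaded_cutoff_hz(16.0, n), 2), "Hz")
```

For ten 16Hz stages this gives an overall cut-off of roughly 4.3Hz even with perfect buffering, which is in line with the "around 4Hz" I saw.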

Although the long-term drifting problem is bigger for this circuit than for the previous one, its short-term performance is definitely better. The experiment below checks the linear relation between the EOG signal and eye movement. The user's eye focuses on each of the five colored points in the graph below in turn. From the signal on the oscilloscope we see that there is a linear relation between the EOG signal and the eye orientation. We also see that the short-term accuracy of the system is good.
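Given that linear relation, one way to turn voltage into angle is a least-squares line fitted to the readings taken while the user looks at the calibration points. The sketch below shows the idea. The five voltage and angle pairs are hypothetical placeholders, not our measurements, and the function names are ours for illustration.

```python
def fit_line(voltages, angles):
    """Least-squares fit of angle = gain * voltage + offset."""
    n = len(voltages)
    mean_v = sum(voltages) / n
    mean_a = sum(angles) / n
    cov = sum((v - mean_v) * (a - mean_a) for v, a in zip(voltages, angles))
    var = sum((v - mean_v) ** 2 for v in voltages)
    gain = cov / var
    return gain, mean_a - gain * mean_v


def voltage_to_angle(voltage, gain, offset):
    """Map an EOG voltage to an eye angle using the fitted line."""
    return gain * voltage + offset


if __name__ == "__main__":
    # Hypothetical calibration readings for five targets; not measured data.
    volts = [2.1, 2.3, 2.5, 2.7, 2.9]
    degrees = [-30.0, -15.0, 0.0, 15.0, 30.0]
    gain, offset = fit_line(volts, degrees)
    print("gain (deg/V):", round(gain, 2), "offset (deg):", round(offset, 2))
    print("angle at 2.6 V:", round(voltage_to_angle(2.6, gain, offset), 2))
```

Because of the drift described above, such a fit would only stay valid for a short time after calibration.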

This is our prototype circuit:

We wrap up our circuit (two layers, one for horizontal eye movement and the other for vertical):

IMU Fusion

This is our final IMU setup. It is placed on top of the user's head, leaning back at about 45 degrees.

Hardware:

We are using the LSM9DS0 as our IMU chip. This chip includes a 3D digital linear acceleration sensor, a 3D digital angular rate sensor, and a 3D digital magnetic sensor. The chip is connected to an mbed via I2C, as shown above, for data processing. The data from the eye detection circuit is also sent to this mbed, which packages all the data and sends it to a Raspberry Pi for communication with the robot. The connection between the mbed and the LSM9DS0 is as follows:

Software

For the purpose of the project, we need real-time data for the pitch and yaw of the user's head. For pitch (vertical head rotation), we rely on the accelerometer and gyroscope. We use a Kalman filter to remove as much of the noise as possible.
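As an illustration of how the accelerometer and gyroscope can be combined for pitch, the sketch below implements a common two-state Kalman filter that tracks the angle and the gyro bias. It is not the fusion code we ran on the mbed. The noise parameters, the axis convention in `accel_pitch_deg`, and the class name are all assumptions chosen for the example.

```python
import math


class PitchKalman:
    """Two-state Kalman filter: pitch angle (deg) and gyro bias (deg/s)."""

    def __init__(self, q_angle=0.001, q_bias=0.003, r_measure=0.03):
        # Assumed process and measurement noise values; tune for real hardware.
        self.q_angle, self.q_bias, self.r_measure = q_angle, q_bias, r_measure
        self.angle = 0.0
        self.bias = 0.0
        self.p = [[0.0, 0.0], [0.0, 0.0]]  # error covariance matrix

    def update(self, accel_angle, gyro_rate, dt):
        # Predict: integrate the bias-corrected gyro rate.
        self.angle += dt * (gyro_rate - self.bias)
        p = self.p
        p[0][0] += dt * (dt * p[1][1] - p[0][1] - p[1][0] + self.q_angle)
        p[0][1] -= dt * p[1][1]
        p[1][0] -= dt * p[1][1]
        p[1][1] += self.q_bias * dt
        # Correct: blend in the angle derived from the accelerometer.
        s = p[0][0] + self.r_measure
        k0, k1 = p[0][0] / s, p[1][0] / s
        err = accel_angle - self.angle
        self.angle += k0 * err
        self.bias += k1 * err
        p00, p01 = p[0][0], p[0][1]
        p[0][0] -= k0 * p00
        p[0][1] -= k0 * p01
        p[1][0] -= k1 * p00
        p[1][1] -= k1 * p01
        return self.angle


def accel_pitch_deg(ax, ay, az):
    """Pitch from the gravity direction; the axis convention is an assumption."""
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))


if __name__ == "__main__":
    kf = PitchKalman()
    # Head held still at 10 degrees, gyro reading a constant 2 deg/s bias.
    for _ in range(3000):
        angle = kf.update(10.0, 2.0, 0.01)
    print("pitch:", round(angle, 2), "estimated bias:", round(kf.bias, 2))
```

The gyroscope gives a smooth short-term estimate and the accelerometer corrects its long-term drift, which is why the two sensors are enough for pitch.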

Robot Design

Mechanical

We are using 6 servos to control the robot body and onboard cameras. Two standard-size servos (Power HD 6001B) control the pitch and yaw of the robot body frame. Four micro servos (Tower Pro SG5R) control the pitch and yaw of the two cameras, two servos for each camera. The overall design can be seen in the following figure:

The horn of the first standard-size servo is attached to the bottom acrylic sheet, and its base is attached to the second acrylic sheet. In this way, when the servo horn rotates, the body of the whole robot also rotates, corresponding to the yaw of the head. The second standard-size servo is attached to the two vertical acrylic sheets, with the camera part attached to the sides. Thus when it rotates, the camera part also rotates, corresponding to the pitch of the head.

For each camera, the first micro servo is attached to the acrylic that supports the whole camera part, and the second micro servo is attached to the first. The camera is attached to the horn of the second servo. With this design, we can keep the center of the camera fixed while changing its yaw and pitch.

Finally, two vertical supporting acrylic sheets are added to hold the two Raspberry Pis used for video streaming, data processing, and servo control.

Electrical

We use two Raspberry Pis in our robot, one for each Pi camera. The first Pi also handles communication with the sensing part and servo control. These are shown in the following image:

We are using a dedicated PWM control circuit (Adafruit 16-Channel 12-bit PWM/Servo Driver - I2C interface - PCA9685) for accurate servo control. As can be seen from the image above, the servos are connected to pins 0, 1, 7, 8, 14, and 15 of the PWM driver. Power comes from an external power supply connected to the black and red wires. Connection instructions between the Raspberry Pi and the PWM driver can be found in this online tutorial by Adafruit (https://learn.adafruit.com/adafruit-16-channel-servo-driver-with-raspberry-pi).

The Raspberry Pis are powered by micro USB cables, and the internet connection is provided through the onboard Ethernet port. Each Pi camera is connected directly to the onboard camera port.

Software

For the PWM driver, we use the Adafruit_PWM_Servo_Driver library. Through the library, we can specify the port, frequency, duty cycle, and polarity. In Adafruit_PWM_Servo_Driver.py, we initialized 6 ports for the 6 servos, each starting at its midpoint. The duty cycle, which corresponds to the angle of each servo, is updated with the information from the sensing part. Communication is performed over TCP/IP, using the standard Python socket library.
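The mapping from an angle to a driver value can be sketched as below. The PCA9685 divides each PWM period into 4096 counts, so a pulse width converts to a count once the PWM frequency is fixed. The 50 Hz frequency, the 1.5 ms midpoint, and the 0.01 ms per degree slope are placeholder assumptions. The real values have to be measured for each servo.

```python
PWM_RESOLUTION = 4096  # PCA9685 counts per PWM period (12-bit)


def pulse_ms_to_ticks(pulse_ms, freq_hz):
    """Convert a pulse width in milliseconds to a 12-bit count."""
    period_ms = 1000.0 / freq_hz
    return int(round(pulse_ms / period_ms * PWM_RESOLUTION))


def angle_to_ticks(angle_deg, mid_pulse_ms=1.5, ms_per_degree=0.01, freq_hz=50):
    """Map a servo angle (0 = midpoint) to a count.

    mid_pulse_ms, ms_per_degree and freq_hz are assumed example values.
    """
    return pulse_ms_to_ticks(mid_pulse_ms + ms_per_degree * angle_deg, freq_hz)


if __name__ == "__main__":
    for angle in (-45, 0, 45):
        # With the legacy Adafruit library this count would be the "off" value,
        # e.g. pwm.setPWM(channel, 0, ticks); the call is not made here so the
        # sketch runs without hardware.
        print(angle, "deg ->", angle_to_ticks(angle), "ticks")
```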

After we receive the angles from the sensing part, we apply a 5-point moving average filter to smooth the data. We also enforce a maximum and minimum for each angle, as well as a deadzone to deal with servo shaking. We use MJPGStreamer for video streaming, which is explained in detail in the next section.
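The smoothing steps can be sketched as a small filter object, one per servo. This is an illustration of the idea rather than our exact code. The angle limits and the 1 degree deadzone are assumed values, and the class name is hypothetical.

```python
from collections import deque


class AngleFilter:
    """Moving average, then clamp, then deadzone for one servo angle."""

    def __init__(self, window=5, min_deg=-45.0, max_deg=45.0, deadzone_deg=1.0):
        # Limits and deadzone are assumed example values.
        self.samples = deque(maxlen=window)
        self.min_deg, self.max_deg = min_deg, max_deg
        self.deadzone_deg = deadzone_deg
        self.output = None

    def update(self, angle_deg):
        # Moving average over the most recent samples.
        self.samples.append(angle_deg)
        average = sum(self.samples) / len(self.samples)
        # Keep the command inside the mechanical limits.
        limited = max(self.min_deg, min(self.max_deg, average))
        # Only move the servo when the change exceeds the deadzone.
        if self.output is None or abs(limited - self.output) >= self.deadzone_deg:
            self.output = limited
        return self.output


if __name__ == "__main__":
    yaw = AngleFilter()
    for raw in (10.0, 10.4, 9.8, 30.0, 100.0, 100.0):
        print(raw, "->", round(yaw.update(raw), 2))
```

A wider deadzone reduces shaking further but makes small eye or head movements invisible to the robot, so the value is a trade-off.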

Video Streaming

Hardware

We use the Pi camera Rev 1.3 for video streaming. The camera is connected directly to the camera port on the Raspberry Pi. The connections can be seen in the following figure:

Software

We use an open source project called MJPGStreamer to interface with the camera module and stream the video over HTTP. The setup code can be found in the cam_setup file:

cd /usr/src

cd mjpg-streamer

cd mjpg-streamer

cd mjpg-streamer-experimental

export LD_LIBRARY_PATH=.

./mjpg_streamer -o "output_http.so -w ./www" -i "input_raspicam.so -x 640 -y 480 -fps 20"

Resolution and frame rate can be changed by editing the -x, -y, and -fps values in the last line of the cam_setup file. Night mode can also be enabled by adding “-night” after the “-fps 20”.

Testing and Evaluation

We tested each part individually and then ran overall tests of the whole system. For eye detection, after we built the circuit, we tested each stage with an oscilloscope to make sure it was working correctly. For the IMU, we ran tests by rotating about each Euler angle individually. We found that the fusion software we were using was not accurate, in the sense that the yaw output changes with pitch. We plan to investigate this issue during summer break. The servos were tested individually to find the midpoint and the duty cycle per degree. Before connecting it to the sensing part, we tested the robot by entering angles manually. The cameras were tested using the MJPGStreamer web page.

Finally, once each individual part was working properly, the robot and the sensing part were connected over TCP/IP. The sensing part sends the angles of the user's head and eye orientation to the robot, and after a moving average is applied, these angles are mapped to the servos. When tested together, the parts worked properly, which means that we have successfully created a telepresence robot that is controlled directly by the user's head and eye movements. To look at something else, the user can simply turn his/her head or roll his/her eyes. This is a very intuitive control mechanism and can provide users with a truly immersive experience. However, there is still more work to do. First, the servos sometimes shake. Second, we haven't constructed an accurate model for EOG, so the output angles are not very accurate. Finally, our IMU fusion program hasn't been calibrated.

Conclusion

After 5 weeks, we successfully built the head-eye orientation detection system and a robot that moves two cameras correspondingly and streams the video over the internet. We have tested the entire system for stability more than 100 times, and the tests showed that the system is highly reliable for a short period of time (usually 30 seconds) after a system reset. After that short period the system might not work properly, due to the complex relation between the EOG signal and the user's metabolism. We will do further experiments to figure that out.